What Does It Mean to Regulate Legal AI?

In collaboration with the Minnesota State Bar Association’s AI Committee and my colleague Damien Riehl, we recently broke down what we should consider when we talk about “regulating AI in legal.” This blog post shares those illustrations and text, which are also available on the Minnesota State Bar Association’s Artificial Intelligence Committee page.

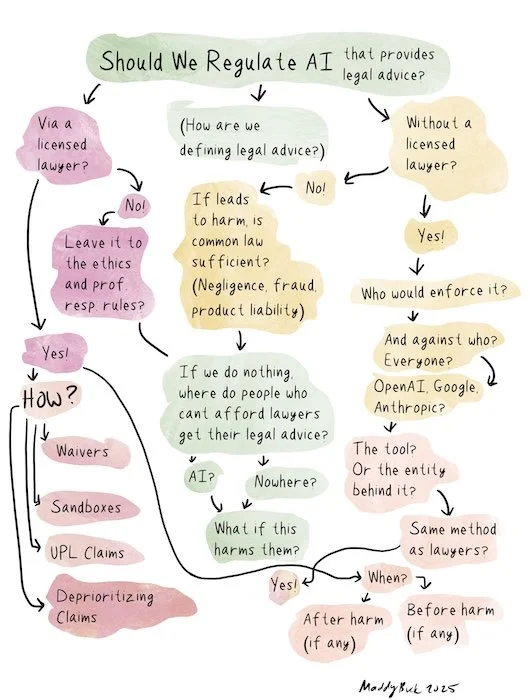

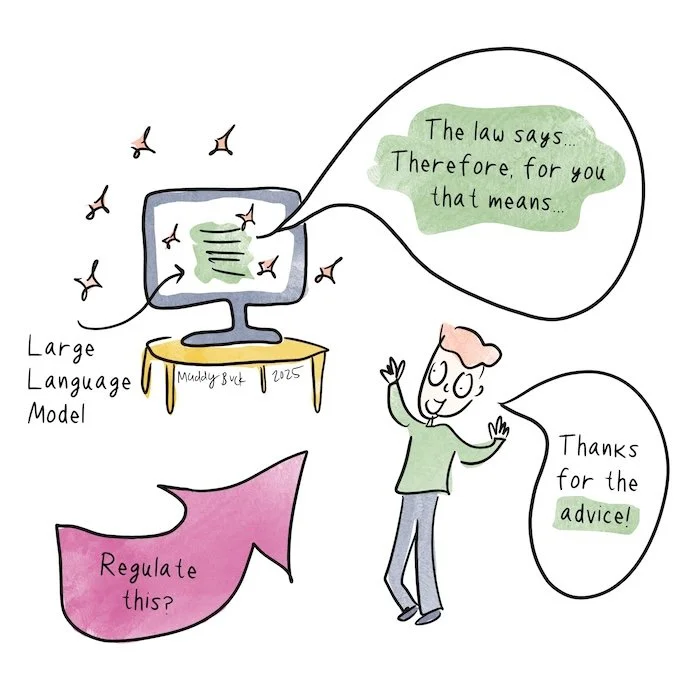

Legal advice is often described as “applying facts to law,” something only licensed lawyers can give. But anyone can provide “legal information” like cases, statutes, and regulations, which also include many facts.

So can we know with certainty when someone or something provides “legal advice” rather than “legal information”? Can an LLM apply facts to law and provide “legal advice”? Courts and regulators haven’t given us clear answers here. Are clear answers even possible?

Should we regulate “legal advice” that comes from someone or something (such as an LLM) that is not a licensed lawyer? If we deem it “legal information,” will any resulting harm be addressed by common law claims (e.g., negligence, product liability, fraud)?

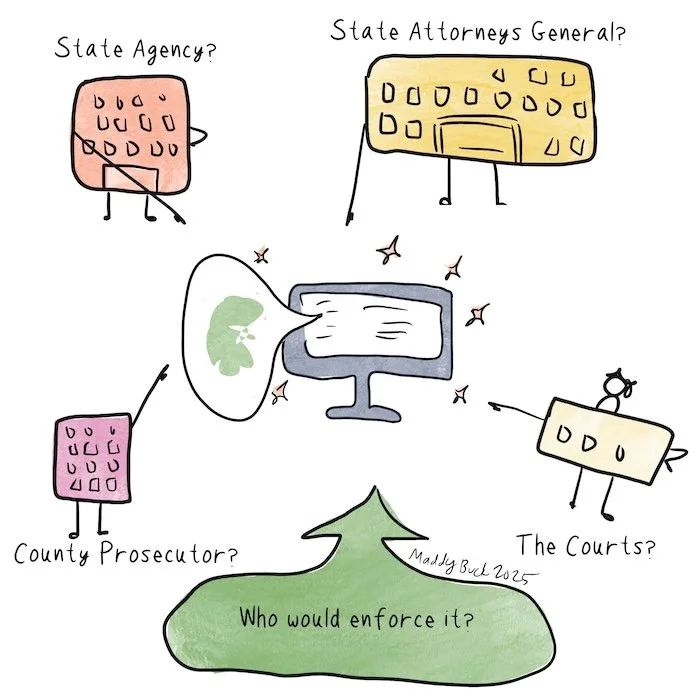

If we do regulate “legal advice” or “legal information” from someone or something that is not a licensed lawyer, who would enforce it? County prosecutors? State attorneys general? A state agency? The courts? And will it be enforced against everyone, including giants like OpenAI, Google, and Anthropic?

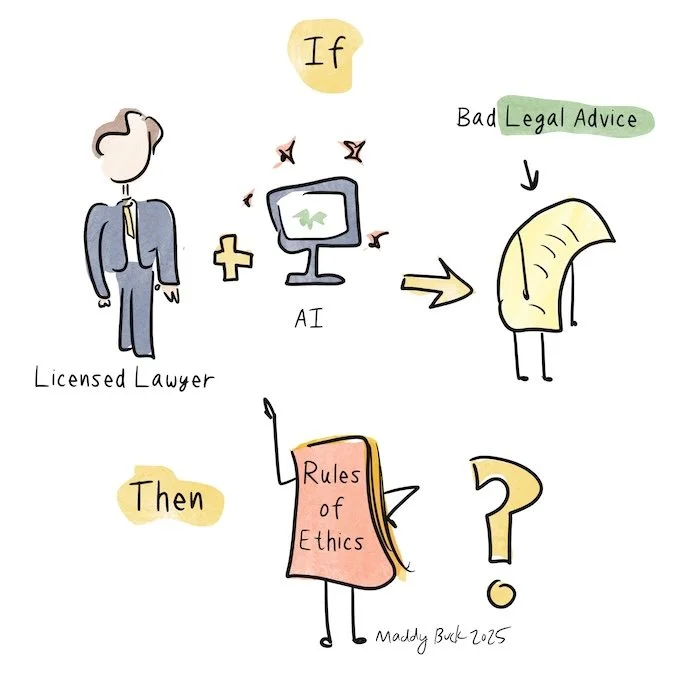

On the other hand, how do we regulate the use of AI by licensed attorneys? Do the rules of ethics and professional responsibility serve this purpose already?

Do we regulate the AI tool itself, or the entity building or operating the tool?

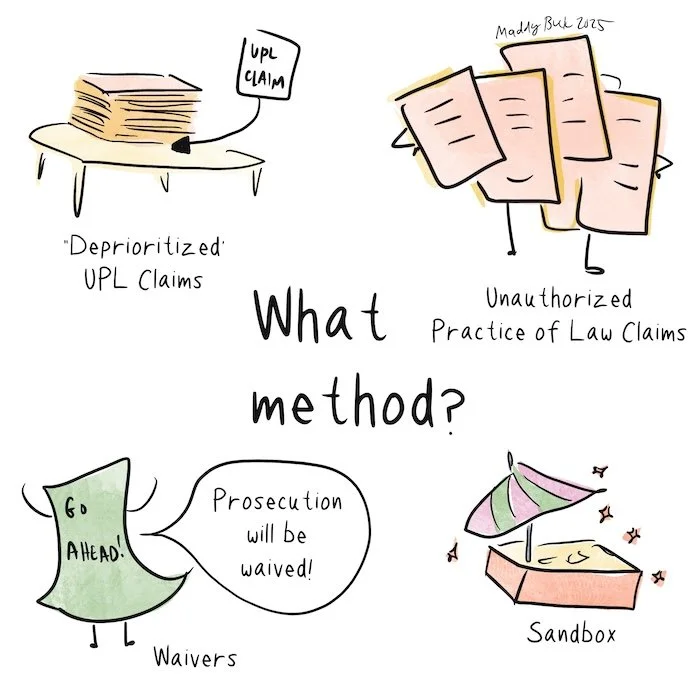

Whether we regulate attorneys who use AI, or non-attorneys (people or tools) that provide legal advice using AI, which mechanism would we use? Unauthorized practice of law (UPL) statutes? Waivers allowing certain entities to provide legal advice when they would normally risk prosecution? A sandbox where ideas can be explored? Prosecutors’ “de-prioritization” of UPL claims?

Do we regulate any of these up front, or wait for harm (if any) to appear?

Should lawyers who use AI be regulated in the same way as entities or LLMs that provide “legal advice” or “legal information” with AI?

So when we talk about “regulating AI use in legal,” which of these do we tackle?

Or do we tackle them all? Or none?

If we do nothing, where will people who can’t afford lawyers get legal advice? Will it be reliable? What if the LLM is more reliable than the average lawyer? What if the tool harms them? And if they get no help from anyone or any AI, what is the resulting harm?

So when someone says “Let’s regulate legal AI,” it’s crucial to identify precisely what we mean.